Adding and storing lyrics in MIDI files is a feature supported by the MIDI standard, allowing lyrics to be embedded directly within the MIDI data. This can be particularly useful for karaoke applications, live performances, or any scenario where the lyrics need to be synchronized with the music. Here’s how lyrics can be added and stored in MIDI files:

1. MIDI Lyric Meta Events

MIDI files can store lyrics using Lyric Meta Events. These events are defined in the Standard MIDI File specification and are designed specifically to embed text, such as lyrics, in a MIDI sequence. Every event in a track carries a delta time, so each word or syllable of the lyrics is tied to a specific position in the track and can be displayed in sync with the music.

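- Meta Event Type: Lyrics are stored in the Lyric Meta Event, whose type byte is 0x05. In the file it appears as FF 05, followed by the text length (as a variable-length quantity) and the text itself.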

- Text Data: The actual lyrics are stored as text data within these events.
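To make the byte layout concrete, here is a minimal Python sketch that encodes a single Lyric Meta Event as it would appear inside a track chunk: a delta time, the bytes FF 05, the text length, and the text. Both the delta time and the length use MIDI's variable-length quantity (7 bits per byte, with the high bit set on every byte except the last). The names `encode_vlq` and `lyric_event` are hypothetical helpers, and the ASCII default is an assumption chosen for portability.

```python
def encode_vlq(value: int) -> bytes:
    """Encode a non-negative integer as a MIDI variable-length quantity."""
    if value < 0:
        raise ValueError("VLQ values must be non-negative")
    out = [value & 0x7F]          # last byte: high bit clear
    value >>= 7
    while value:
        out.append((value & 0x7F) | 0x80)  # earlier bytes: high bit set
        value >>= 7
    return bytes(reversed(out))


def lyric_event(delta_ticks: int, text: str, encoding: str = "ascii") -> bytes:
    """Build one track event: <delta-time> FF 05 <length> <text bytes>."""
    data = text.encode(encoding)
    return encode_vlq(delta_ticks) + b"\xff\x05" + encode_vlq(len(data)) + data


print(lyric_event(0, "Hel").hex(" "))    # 00 ff 05 03 48 65 6c
print(lyric_event(480, "lo ").hex(" "))  # 83 60 ff 05 03 6c 6f 20
```

The second call shows a delta of 480 ticks being encoded as the two bytes 83 60.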

2. Software for Adding Lyrics

To add lyrics to a MIDI file, you typically use a MIDI sequencing or editing software that supports Lyric Meta Events. Here’s how you can do it:

Using Digital Audio Workstations (DAWs)

Some DAWs and MIDI editing software allow you to add lyrics directly to a MIDI track. Examples include:

- Cakewalk by BandLab: Offers a dedicated view for MIDI lyrics, in which you can type lyrics directly into a MIDI track and align them with the corresponding notes.

- Cubase: Another DAW that allows the addition of lyrics via the MIDI editor.

- MuseScore: A free, open-source notation program that lets you attach lyrics to notes in a score, which can then be exported as a MIDI file.

Steps to Add Lyrics in a DAW

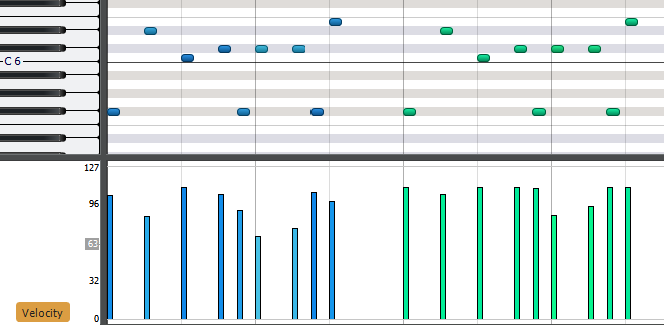

- Import or Create a MIDI Track: Start by importing an existing MIDI file or creating a new MIDI sequence in your DAW.

- Access the MIDI Editor: Open the MIDI editor in your DAW to view the MIDI events. Look for a lyrics view or an option to insert lyric events; its name and location vary between programs.

- Enter Lyrics:

  - In Cakewalk, for example, you use the Lyrics view to type the lyrics, aligning each word or syllable with its note.

  - In MuseScore, you select the note where the lyric should appear and then type the word or syllable.

- Sync Lyrics with Music: Ensure the lyrics are synchronized with the music. Each word or syllable should be associated with the appropriate note, allowing it to display in time with the music during playback.

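- Save the MIDI File: Once the lyrics are added and synced, save or export the MIDI file. Programs that support MIDI lyrics normally write them as Lyric Meta Events, although some karaoke-oriented tools store them as Text meta events (FF 01) instead.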
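What a DAW does on save can be sketched in a few dozen lines of Python. The hypothetical `build_lyric_midi` function below writes a format-0 Standard MIDI File in which every syllable is stored as a Lyric Meta Event at the same tick as the note-on of its note, which is what "syncing" amounts to at the file level. The melody, velocity, channel, and 480 ticks-per-quarter resolution are illustrative assumptions. No tempo event is written, so players fall back to the SMF default of 120 BPM.

```python
import struct


def encode_vlq(value):
    """Encode a non-negative integer as a MIDI variable-length quantity."""
    out = [value & 0x7F]
    value >>= 7
    while value:
        out.append((value & 0x7F) | 0x80)
        value >>= 7
    return bytes(reversed(out))


def build_lyric_midi(notes, ticks_per_quarter=480):
    """Return a format-0 Standard MIDI File (as bytes) with synced lyrics.

    notes: (pitch, duration_ticks, syllable) tuples, played one after another
    on MIDI channel 1. Each syllable becomes a Lyric meta event (FF 05) placed
    at the same tick as its note-on.
    """
    track = bytearray()
    for pitch, duration, syllable in notes:
        text = syllable.encode("ascii")
        track += encode_vlq(0) + b"\xff\x05" + encode_vlq(len(text)) + text  # lyric
        track += encode_vlq(0) + bytes([0x90, pitch, 100])       # note-on, same tick
        track += encode_vlq(duration) + bytes([0x80, pitch, 0])  # note-off after duration
    track += encode_vlq(0) + b"\xff\x2f\x00"                      # End of Track
    header = b"MThd" + struct.pack(">IHHH", 6, 0, 1, ticks_per_quarter)
    return header + b"MTrk" + struct.pack(">I", len(track)) + bytes(track)


song = [(60, 480, "Hap"), (60, 240, "py "), (62, 720, "birth"), (60, 720, "day ")]
data = build_lyric_midi(song)
print(len(data), "bytes")
```

Writing the returned bytes to disk, for example with `open("lyrics_demo.mid", "wb").write(data)`, should give a file whose lyrics a lyric-aware player can display as the notes sound.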

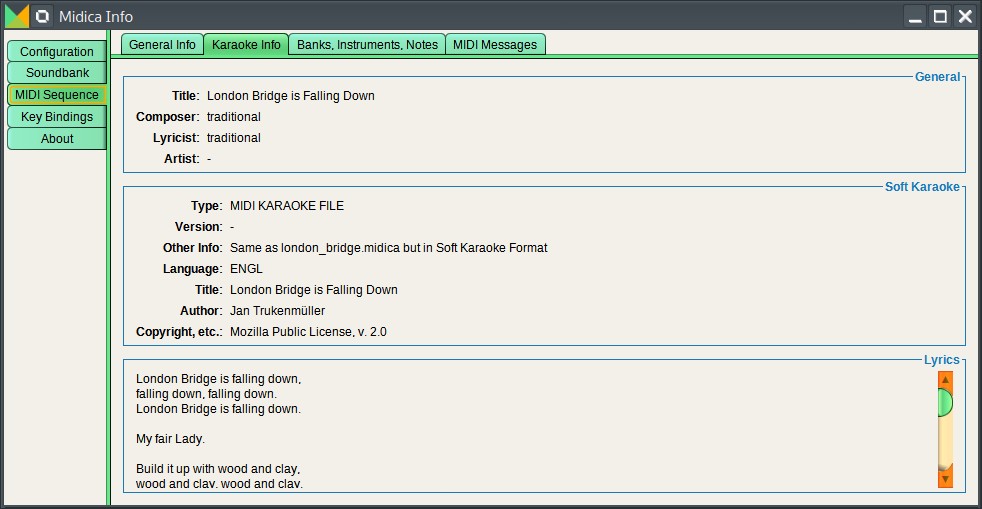

3. Karaoke MIDI Files

MIDI files with embedded lyrics are often used in karaoke systems. These files are typically referred to as MIDI-Karaoke or KAR files (MIDI files with a .kar extension).

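- KAR Files: These are Standard MIDI Files with lyrics and other karaoke metadata (such as the song title) added for karaoke systems. By convention, the .kar format stores its lyrics in Text meta events (FF 01) rather than Lyric Meta Events, using a leading "/" to start a new line and a leading "\" to start a new paragraph. Many karaoke programs support these files and can display the lyrics on screen in sync with the music.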
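Because a KAR file's lyrics arrive as a stream of short text fragments, a player has to reassemble them into screen lines. The sketch below assumes the commonly documented Soft Karaoke conventions: fragments beginning with "@" carry header information and are not sung, a leading "\" starts a new paragraph (a new screen), and a leading "/" starts a new line. Real files follow these conventions loosely, the sample fragments are made up for illustration, and `kar_display_lines` is a hypothetical helper rather than part of any library.

```python
def kar_display_lines(text_events):
    """Group KAR-style text fragments into paragraphs of display lines.

    Assumed conventions (Soft Karaoke style; real files vary):
      - fragments starting with "@" are header info (e.g. title) and are skipped
      - a leading backslash starts a new paragraph (a new screen)
      - a leading "/" starts a new line within the current paragraph
    Returns a list of paragraphs, each a list of line strings.
    """
    paragraphs = [[""]]
    for text in text_events:
        if text.startswith("@"):
            continue
        if text.startswith("\\"):
            paragraphs.append([""])
            text = text[1:]
        elif text.startswith("/"):
            paragraphs[-1].append("")
            text = text[1:]
        paragraphs[-1][-1] += text
    # Drop paragraphs that never received any text (e.g. the initial placeholder)
    return [p for p in paragraphs if any(p)]


events = ["@KMIDI KARAOKE FILE", "@TDemo song", "\\Hap", "py ", "birth", "day ", "/to ", "you"]
for paragraph in kar_display_lines(events):
    print(paragraph)
# ['Happy birthday ', 'to you']
```

A real player would also track which fragment is currently being sung, typically by highlighting text up to the most recent event whose tick has been reached.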

4. Playback of MIDI Files with Lyrics

To view and play back the lyrics embedded in a MIDI file, you’ll need a compatible MIDI player or software that can interpret and display the Lyric Meta Events.

- MIDI Players with Lyric Support: Some MIDI players, such as vanBasco’s Karaoke Player, can display lyrics as the MIDI file plays.

- DAWs: Many DAWs that support MIDI lyrics can also display them during playback, allowing you to see how the lyrics align with the music.
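Lyric display in a player starts with walking the track chunks and collecting the meta events together with their absolute tick positions. The following Python sketch does that using only the standard library. It handles running status, SysEx, and meta events, but it is a simplified reader (no error recovery, no timing conversion), and `extract_lyrics` is a hypothetical name. Passing `meta_types=(0x01, 0x05)` also collects Text events, which is useful for KAR files. Text is decoded as Latin-1 here only because that decoding never fails; the correct encoding depends on the file.

```python
import struct


def read_vlq(data, pos):
    """Read a MIDI variable-length quantity at `pos`; return (value, next_pos)."""
    value = 0
    while True:
        byte = data[pos]
        pos += 1
        value = (value << 7) | (byte & 0x7F)
        if not byte & 0x80:  # high bit clear marks the last byte
            return value, pos


def extract_lyrics(data, meta_types=(0x05,)):
    """Return (track_index, absolute_tick, text) for each matching meta event.

    meta_types defaults to Lyric events (0x05); add 0x01 to include Text events.
    Simplified reader: assumes a well-formed file and decodes text as Latin-1.
    """
    if data[:4] != b"MThd":
        raise ValueError("not a Standard MIDI File")
    (header_len,) = struct.unpack(">I", data[4:8])
    pos = 8 + header_len
    results, track_index = [], 0
    while pos + 8 <= len(data):
        chunk_type = data[pos:pos + 4]
        (length,) = struct.unpack(">I", data[pos + 4:pos + 8])
        i, end = pos + 8, pos + 8 + length
        pos = end
        if chunk_type != b"MTrk":
            continue  # ignore unknown chunk types
        tick, status = 0, 0
        while i < end:
            delta, i = read_vlq(data, i)
            tick += delta
            if data[i] & 0x80:  # explicit status byte; otherwise running status
                status = data[i]
                i += 1
            if status == 0xFF:  # meta event: FF <type> <length> <data>
                meta_type = data[i]
                size, i = read_vlq(data, i + 1)
                if meta_type in meta_types:
                    results.append((track_index, tick, data[i:i + size].decode("latin-1")))
                i += size
            elif status in (0xF0, 0xF7):  # SysEx: F0/F7 <length> <data>
                size, i = read_vlq(data, i)
                i += size
            else:  # channel message: program change and channel pressure have 1 data byte
                i += 1 if (status & 0xF0) in (0xC0, 0xD0) else 2
        track_index += 1
    return results


# Usage (hypothetical file name):
# with open("song.kar", "rb") as f:
#     for track, tick, text in extract_lyrics(f.read(), meta_types=(0x01, 0x05)):
#         print(track, tick, text)
```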

5. Considerations

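- Encoding: The original Standard MIDI File specification does not define a character encoding for text and lyric events, so plain ASCII is the most portable choice. Non-ASCII lyrics (stored as UTF-8, Latin-1, Shift-JIS, and so on) may display incorrectly in players that assume a different encoding.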

- Timing: Precise timing is crucial when syncing lyrics with music. Place each Lyric Meta Event at the same tick as the note-on of the note it belongs to, so that each word or syllable appears exactly when it is sung.
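Both considerations can be illustrated with two small helpers. `tick_to_seconds` converts a lyric event's tick position into playback time using a tempo map built from Set Tempo meta events (FF 51, microseconds per quarter note), defaulting to 500,000 microseconds per quarter note (120 BPM) as the SMF specification does when no tempo is set; it assumes a ticks-per-quarter-note division rather than SMPTE timing. `decode_lyric` is a heuristic fallback for files of unknown encoding. Both are hypothetical sketches, not part of any MIDI library.

```python
def tick_to_seconds(tick, ticks_per_quarter, tempo_map=((0, 500_000),)):
    """Convert an absolute tick position to seconds.

    tempo_map: (tick, microseconds_per_quarter_note) pairs sorted by tick,
    e.g. collected from Set Tempo (FF 51) meta events. Before the first entry,
    the SMF default of 500,000 us per quarter note (120 BPM) applies.
    Assumes a ticks-per-quarter-note (PPQ) division, not SMPTE timing.
    """
    seconds = 0.0
    prev_tick, prev_tempo = 0, 500_000
    for change_tick, tempo in tempo_map:
        if change_tick >= tick:
            break  # later tempo changes do not affect this position
        # Accumulate time spent at the previous tempo up to this change
        seconds += (change_tick - prev_tick) * prev_tempo / (ticks_per_quarter * 1_000_000)
        prev_tick, prev_tempo = change_tick, tempo
    # Add the remaining span at the tempo in effect at `tick`
    seconds += (tick - prev_tick) * prev_tempo / (ticks_per_quarter * 1_000_000)
    return seconds


def decode_lyric(raw: bytes) -> str:
    """Heuristic decoding: try UTF-8 first, then fall back to Latin-1.

    Latin-1 maps every byte to a character, so the fallback never raises,
    but it will show the wrong characters for, e.g., Shift-JIS lyrics.
    """
    try:
        return raw.decode("utf-8")
    except UnicodeDecodeError:
        return raw.decode("latin-1")


tempo_map = [(0, 500_000), (960, 250_000)]    # 120 BPM, then 240 BPM from tick 960
print(tick_to_seconds(1440, 480, tempo_map))  # 1.25
```

The printed 1.25 is one second for the first 960 ticks at 120 BPM plus a quarter second for the next 480 ticks at 240 BPM.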

Conclusion

Lyrics can be added and stored in MIDI files using Lyric Meta Events, making it possible to synchronize text with music for applications like karaoke or live performance. By using MIDI editing software or DAWs that support lyric entry, you can embed the lyrics directly in the MIDI file so that they are displayed in sync with the corresponding notes. This feature adds another layer of interactivity and functionality to MIDI, making it a versatile tool for music production and performance.