MIDI files are small because they contain instructions rather than actual audio data. Unlike audio files, which store detailed sound wave information, MIDI files store a series of commands that tell a synthesizer or computer how to generate sounds. Here’s a closer look at why MIDI files are so compact:

1. Data Type

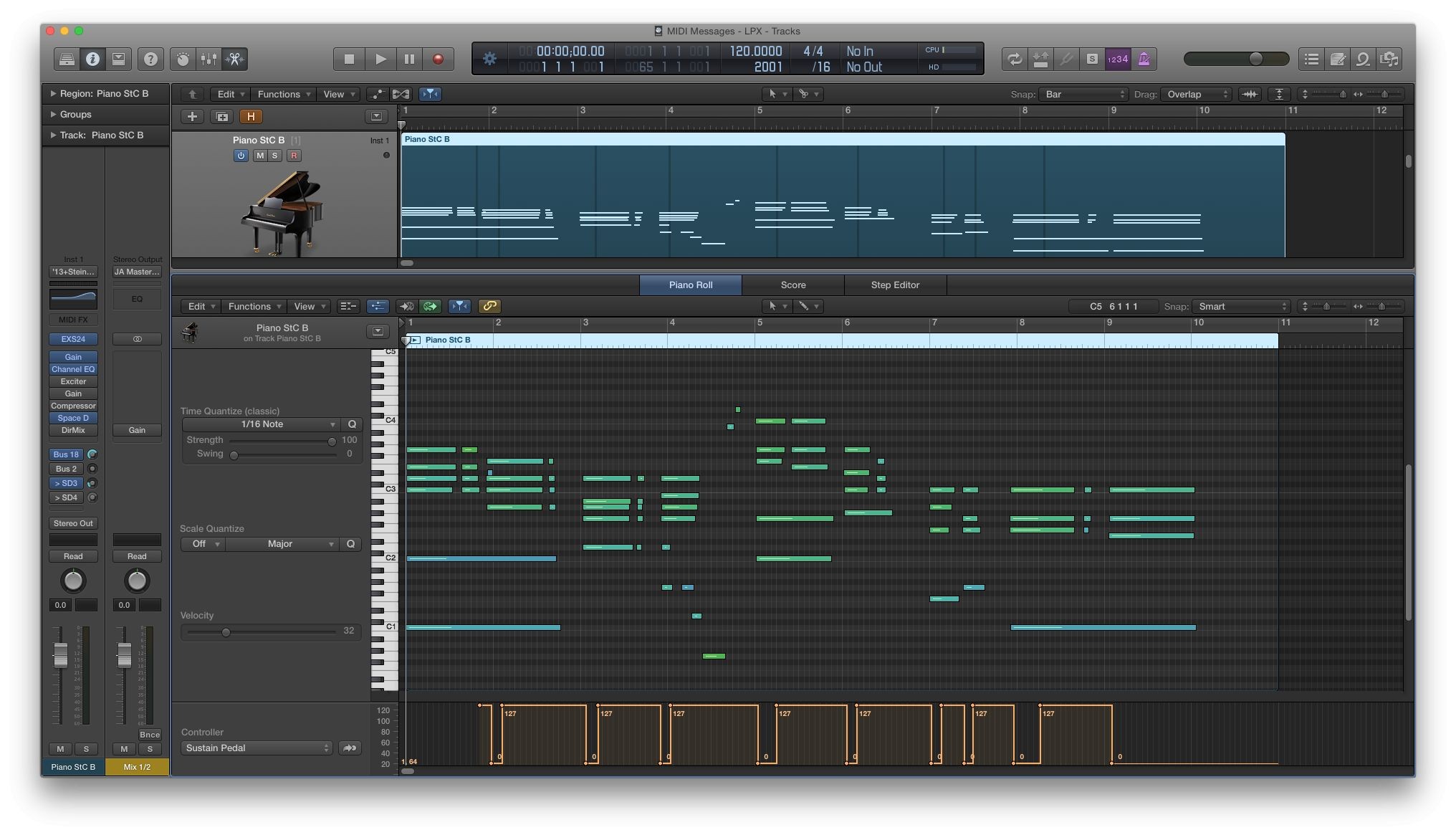

- MIDI Files Contain Instructions: MIDI stands for Musical Instrument Digital Interface. MIDI files contain instructions like which notes to play, how long to play them, how loud they should be, and which instrument should be used. These instructions are encoded as simple, compact data.

- No Audio Data: MIDI files do not store audio waveforms. Instead, they store numerical representations of musical events (e.g., “play note C4 with a velocity of 90”). This is fundamentally different from audio files, which store the actual sound waves as large sets of data points.
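The example event “play note C4 with a velocity of 90” turns into plain numbers. The Python sketch below shows that conversion. It assumes the common convention where middle C (C4) is note 60; some manufacturers call note 60 “C3” instead. The helper name `note_name_to_number` is illustrative only.

```python
# Semitone offset of each natural note above C within one octave.
NOTE_OFFSETS = {"C": 0, "D": 2, "E": 4, "F": 5, "G": 7, "A": 9, "B": 11}


def note_name_to_number(name: str) -> int:
    """Convert a name such as 'C4', 'F#3' or 'Bb2' to a MIDI note number (0-127).

    Assumes the convention where C4 (middle C) is note 60.
    """
    letter, rest = name[0].upper(), name[1:]
    accidental = 0
    if rest.startswith("#"):
        accidental, rest = 1, rest[1:]
    elif rest.startswith("b"):
        accidental, rest = -1, rest[1:]
    number = (int(rest) + 1) * 12 + NOTE_OFFSETS[letter] + accidental
    if not 0 <= number <= 127:
        raise ValueError(f"{name} is outside the MIDI note range 0-127")
    return number


# "Play note C4 with a velocity of 90" reduces to a couple of small integers.
event = {"type": "note_on", "note": note_name_to_number("C4"), "velocity": 90}
print(event)  # {'type': 'note_on', 'note': 60, 'velocity': 90}
```

Both numbers fit in the range 0–127, which is why each needs only a single byte in a MIDI message.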

2. Efficiency

- Event-Based System: MIDI is an event-based system in which each event (such as a note being played or a control change) is represented by a few bytes of data. For example, a “Note On” message requires only 3 bytes: one status byte that identifies the command and the MIDI channel, followed by two data bytes for the note number and the velocity.

- Minimal Data Required: Because each MIDI event requires so little data, even a complex piece of music with multiple instruments and extensive control changes can be represented in a few kilobytes to a few tens of kilobytes.
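The Python sketch below shows the 3-byte Note On layout and gives a rough size estimate. The helper names are hypothetical. The estimate rests on three assumptions: a piece with 3,000 notes, each note sent as one Note On and one Note Off, and a 2-byte delta time (the timing value a MIDI file stores before every message) in front of each message.

```python
def note_on(channel: int, note: int, velocity: int) -> bytes:
    """Note On: status byte 0x9n (n = channel 0-15), then note and velocity."""
    if not (0 <= channel <= 15 and 0 <= note <= 127 and 0 <= velocity <= 127):
        raise ValueError("channel must be 0-15; note and velocity must be 0-127")
    return bytes([0x90 | channel, note, velocity])


def note_off(channel: int, note: int, velocity: int = 64) -> bytes:
    """Note Off: status byte 0x8n, then note and release velocity."""
    if not (0 <= channel <= 15 and 0 <= note <= 127 and 0 <= velocity <= 127):
        raise ValueError("channel must be 0-15; note and velocity must be 0-127")
    return bytes([0x80 | channel, note, velocity])


def estimate_note_bytes(num_notes: int, delta_bytes: int = 2) -> int:
    """Rough size of the note data: each note is a Note On plus a Note Off,
    and each 3-byte message is preceded by a delta time of `delta_bytes` bytes."""
    return num_notes * 2 * (3 + delta_bytes)


msg = note_on(channel=0, note=60, velocity=90)  # C4, velocity 90, MIDI channel 1
print(msg.hex(" "), len(msg))     # 90 3c 5a 3
print(estimate_note_bytes(3000))  # 30000 bytes, i.e. about 30 KB
```

The status byte carries two pieces of information. Its high nibble (`9` for Note On, `8` for Note Off) names the command, and its low nibble selects one of 16 channels.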

3. Channel and Track Organization

- Use of MIDI Channels: Each MIDI message carries a channel number (1–16), and each channel can be assigned a different instrument. Multiple channels can be handled within a single track (in a Type 0 file, all channels share one track), and all this information is packed efficiently into the file.

- Track Information: In MIDI Type 1 files, the data is organized into multiple tracks that play simultaneously. Each track holds only its events and the timing between them (delta times), so it takes up minimal space.
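The following sketch builds a complete two-track Type 1 file in memory, using only Python's standard library. It shows how little space the chunk headers and events need. Everything in it was chosen arbitrarily for illustration: the musical content (a C major scale on channel 1 and a held bass note on channel 2), the resolution of 480 ticks per quarter note, and the helper names.

```python
import struct


def vlq(value: int) -> bytes:
    """Encode a non-negative integer as a MIDI variable-length quantity:
    7 bits per byte, with the high bit set on every byte except the last."""
    if value < 0:
        raise ValueError("delta times must be non-negative")
    out = [value & 0x7F]
    value >>= 7
    while value:
        out.append((value & 0x7F) | 0x80)
        value >>= 7
    return bytes(reversed(out))


def header_chunk(fmt: int, ntracks: int, ticks_per_quarter: int) -> bytes:
    """'MThd' + 4-byte length (always 6) + format, track count, time division."""
    return b"MThd" + struct.pack(">IHHH", 6, fmt, ntracks, ticks_per_quarter)


def track_chunk(events: list) -> bytes:
    """'MTrk' + 4-byte length + (delta time, message) pairs + End of Track."""
    data = b"".join(vlq(delta) + msg for delta, msg in events)
    data += vlq(0) + b"\xff\x2f\x00"  # End of Track meta-event
    return b"MTrk" + struct.pack(">I", len(data)) + data


TPQ = 480  # ticks per quarter note (an arbitrary but common resolution)

# Track 1, channel index 0 (shown as MIDI channel 1): a C major scale in quarter notes.
scale = []
for note in (60, 62, 64, 65, 67, 69, 71, 72):
    scale.append((0, bytes([0x90, note, 90])))    # Note On, immediately
    scale.append((TPQ, bytes([0x80, note, 64])))  # Note Off one quarter note later

# Track 2, channel index 1 (MIDI channel 2): one bass note held for eight quarter notes.
bass = [(0, bytes([0x91, 48, 80])), (8 * TPQ, bytes([0x81, 48, 64]))]

smf = header_chunk(1, 2, TPQ) + track_chunk(scale) + track_chunk(bass)
print(len(smf), "bytes")  # 119 bytes
```

Writing `smf` to disk (for example with `open("demo.mid", "wb").write(smf)`) should give a file that a MIDI player can open. The whole file is 119 bytes. For comparison, one second of CD-quality stereo audio takes about 176 KB.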

4. Absence of Audio Recording

- No Sound Recording: MIDI files do not record or store sound. They do not capture audio from a microphone or any other source. This dramatically reduces the file size compared to audio files like WAV or MP3, which store detailed information about the sound waves.

5. Repeatable Instructions

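- Repetitive Commands: Many MIDI sequences involve repeated instructions, such as the same note or control change being triggered multiple times. Each instance still has to be stored, but MIDI keeps the cost down with “running status.” When consecutive messages share the same status byte (for example, a series of Note On messages on the same channel), the status byte can be omitted after the first one. Each repeated message then needs only its two data bytes instead of three.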
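One technique MIDI uses to shrink repeated messages is running status: a status byte that matches the previous one can be left out. The encoder below is a minimal Python sketch of that idea. It is simplified in two ways. It handles only channel messages, and it ignores delta times. In a real file, delta times sit between messages without breaking running status, whereas meta-events and System Exclusive events do cancel it. The sketch also uses the common trick of sending a Note On with velocity 0 in place of a Note Off, so a chord and its release can all share one status byte.

```python
def encode_with_running_status(messages: list) -> bytes:
    """Concatenate channel messages, omitting any status byte that repeats
    the previous one (running status). Simplified: channel messages only."""
    out = bytearray()
    running = None
    for msg in messages:
        if msg[0] == running:
            out += msg[1:]  # same status as the previous message: data bytes only
        else:
            out += msg      # new status: send the full message
            running = msg[0]
    return bytes(out)


chord = [60, 64, 67]  # C major chord on channel index 0
messages = [bytes([0x90, n, 90]) for n in chord]  # Note On for each chord tone
messages += [bytes([0x90, n, 0]) for n in chord]  # velocity 0 acts as Note Off

full = b"".join(messages)
compact = encode_with_running_status(messages)
print(len(full), len(compact))  # 18 13
```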

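6. Lyrics and Other Meta-Events

- Inclusion of Lyrics or Meta-Events: Even when MIDI files include lyrics or other meta-events (like tempo changes), this data occupies very little space compared to audio data. Lyrics and other text are stored as short strings. A tempo change takes only a few bytes: a 3-byte tempo value (microseconds per quarter note) plus a short event header.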
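The two kinds of meta-event mentioned above, lyrics and tempo changes, can be encoded as in the sketch below. It follows the Standard MIDI File layout for meta-events: `FF`, a type byte, a variable-length length field, then the data. The lyrics are assumed to be plain ASCII, and the helper names are illustrative.

```python
def vlq(value: int) -> bytes:
    """MIDI variable-length quantity: 7 bits per byte, high bit set on all but the last."""
    out = [value & 0x7F]
    value >>= 7
    while value:
        out.append((value & 0x7F) | 0x80)
        value >>= 7
    return bytes(reversed(out))


def tempo_event(bpm: float) -> bytes:
    """Set Tempo: FF 51 03, then microseconds per quarter note as 3 bytes."""
    usec_per_quarter = round(60_000_000 / bpm)
    return b"\xff\x51\x03" + usec_per_quarter.to_bytes(3, "big")


def lyric_event(text: str) -> bytes:
    """Lyric: FF 05, the text length as a variable-length quantity, then the text."""
    data = text.encode("ascii")  # assumption: plain ASCII lyrics
    return b"\xff\x05" + vlq(len(data)) + data


print(tempo_event(120).hex(" "))  # ff 51 03 07 a1 20
print(len(lyric_event("love")))   # 7
```

In a track, each of these events is also preceded by a delta time of at least one byte, so a tempo change costs about 7 bytes in total.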

Example of File Size Differences:

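- MIDI File: A typical MIDI file for a song is usually around 5–50 KB.

- Audio File: A recording of the same song is far larger. At CD quality (44.1 kHz, 16-bit, stereo), uncompressed WAV audio takes about 10.6 MB per minute, so a four-minute song comes to roughly 42 MB. An MP3 at 128 kbps takes about 1 MB per minute, or roughly 4 MB for the same song. Depending on format and quality, audio files therefore range from a few megabytes to tens of megabytes.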
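The size of an audio recording can be estimated with simple arithmetic. Uncompressed PCM size is duration × sample rate × bytes per sample × channels. A constant-bitrate MP3 is roughly duration × bitrate ÷ 8. The quick Python check below assumes a four-minute song and a 30 KB MIDI file for comparison, and it ignores file headers and metadata.

```python
def pcm_bytes(seconds: int, sample_rate: int = 44_100, bits: int = 16, channels: int = 2) -> int:
    """Uncompressed PCM audio (as in a WAV file), excluding the file header."""
    return seconds * sample_rate * (bits // 8) * channels


def mp3_bytes(seconds: int, kbps: int = 128) -> int:
    """Constant-bitrate MP3 audio data, excluding tags and metadata."""
    return seconds * kbps * 1000 // 8


song = 4 * 60       # assumed length: four minutes
midi_size = 30_000  # assumed size of a MIDI file for the same song

wav = pcm_bytes(song)
mp3 = mp3_bytes(song)
print(f"WAV: {wav / 1e6:.1f} MB, MP3: {mp3 / 1e6:.1f} MB")  # WAV: 42.3 MB, MP3: 3.8 MB
print(f"WAV is about {wav / midi_size:.0f}x larger than the MIDI file")  # about 1411x
```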

Summary:

MIDI files are small because they don’t store actual audio but rather the instructions needed to generate the audio. This event-based system, combined with the efficient encoding of musical commands, makes MIDI files extremely compact. The small file size is one of the reasons MIDI is still widely used in music production. Because the notes are stored as editable instructions, MIDI is also especially useful where flexibility and ease of manipulation are important.